I’m only including this example because it was so outrageous! A government agency was investigating various hardware platforms for (what I guess was) HPC cryptographic applications. Obviously, they were not sharing any of their actual code, but instead specified a number of number crunching challenges for large scale multiprocessors. I took up the challenge of Solitaire with a 16-way E6000 and more than 8GB of RAM.

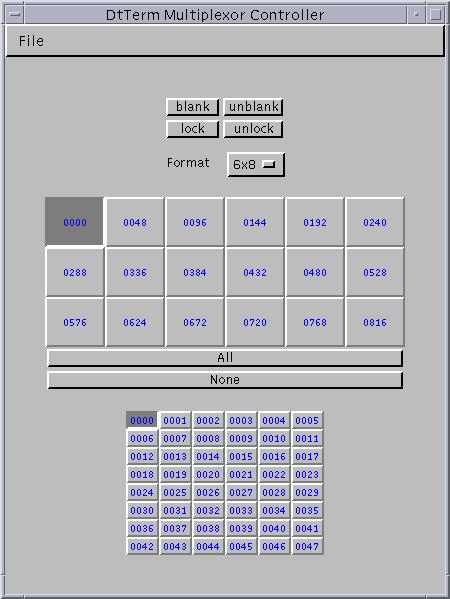

o o o * * * o o o 5 6 7 5 6 7

o o o * * * o o o 2 3 4 2 3 4

o o o o o o o * * * * * * * o o o o o o o 0 1 7 4 0 1 0 2 5

o o o o o o o * * * o * * * o o o * o o o 6 3 1 x 1 3 6

o o o o o o o * * * * * * * o o o o o o o 5 2 0 1 0 4 7

o o o * * * o o o 4 3 2

o o o * * * o o o 7 6 5

Fig.1 Fig.2 Fig.3 Fig.4 Fig.5

In Figs.1-3 a “o” represents a hole, and a “*” a marble in a hole. Thus, Fig.1 shows the empty board, Fig.2 the staring position (32 marbles), and Fig.3 the target end position. My solution was to encode each board position as 33 bits, with 0 for a hole and 1 for a marble. Threads in a worker pool took known board positions from a work pile and found all possible news moves, which were then added to the work pile. Exploiting rotational and reflectional symmetry, each new board position becomes up to eight possible board positions.

By coding the 33 bits as shown in Figs.4-5 I was able to make rotation a simple byte swap. Reflection is harder, but only needs to be done once (since the remaining three reflections and be achieved by rotating the first reflection). The really extravagant part was adding an 8GB char array to record board positions that had already been seen (and which, therefore didn’t require to be explored again).

The result was a solution in just 2 seconds, with all possible board positions being found within 30 seconds. Sun hardware and my expertise in multithreading has moved on a lot in the intervening years. I’m now itching to try the exercise again on an T5220!